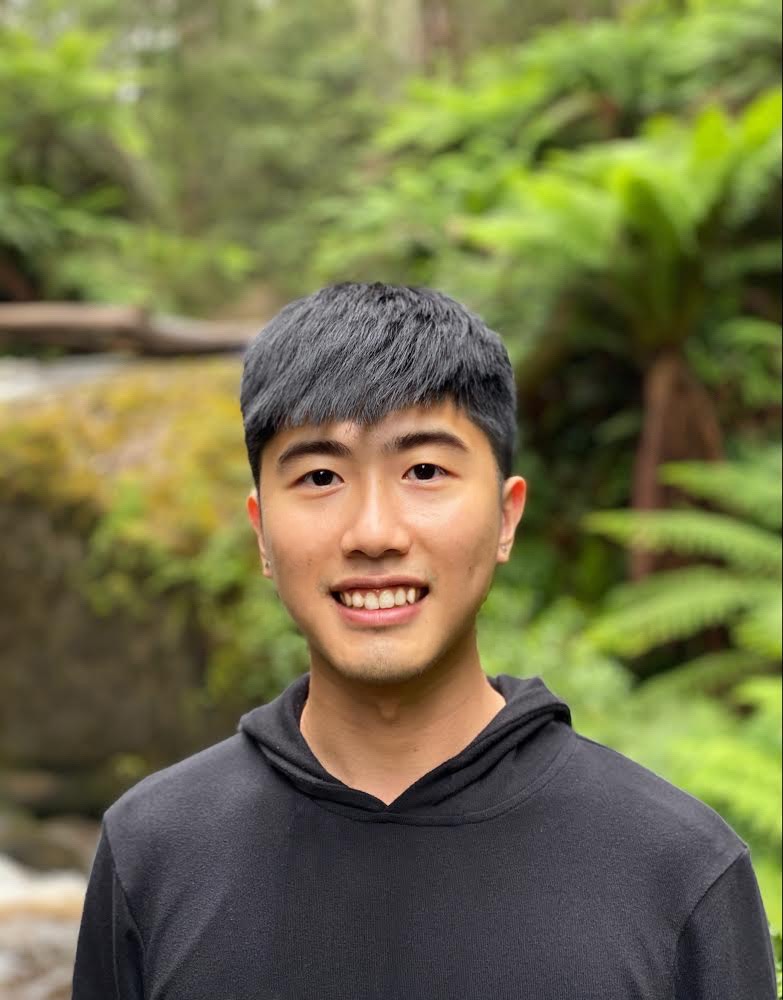

Jinqiang Yu

Machine Learning Engineering at TikTok

Ph.D at Monash University

Email: jinqiang.yuuu AT gmail.com

Research Interests

LLM, Multimodal LLM, Explainable AI, Machine Learning, GenAI, NLP, CV,

Publications

-

From Formal Boosted Tree Explanations to Interpretable Rule Sets 29th International Conference on Principles and Practice of Constraint Programming (CP), 2023

The rapid rise of Artificial Intelligence (AI) and Machine Learning (ML) has invoked the need for explainable AI (XAI). One of the most prominent approaches to XAI is to train rule-based ML models, e.g. decision trees, lists and sets, that are deemed interpretable due to their transparent nature. Recent years have witnessed a large body of work in the area of constraints- and reasoning-based approaches to the inference of interpretable models, in particular decision sets (DSes). Despite being shown to outperform heuristic approaches in terms of accuracy, most of them suffer from scalability issues and often fail to handle large training data, in which case no solution is offered. Motivated by this limitation and the success of gradient boosted trees, we propose a novel anytime approach to producing DSes that are both accurate and interpretable. The approach makes use of the concept of a generalized formal explanation and builds on the recent advances in formal explainability of gradient boosted trees. Experimental results obtained on a wide range of datasets, demonstrate that our approach produces DSes that more accurate than those of the state-of-the-art algorithms and comparable with them in terms of explanation size.

[PDF] -

Eliminating The Impossible, Whatever Remains Must Be True 37th AAAI Conference on Artificial Intelligence (AAAI), pp. 4123–4131, 2023

The rise of AI methods to make predictions and decisions has led to a pressing need for more explainable artificial intelligence (XAI) methods. One common approach for XAI is to produce a post-hoc explanation, explaining why a black box ML model made a certain prediction. Formal approaches to post-hoc explanations provide succinct reasons for why a prediction was made, as well as why not another prediction was made. But these approaches assume that features are independent and uniformly distributed. While this means that “why” explanations are correct, they may be longer than required. It also means the “why not” explanations may be suspect as the counterexamples they rely on may not be meaningful. In this paper, we show how one can apply background knowledge to give more succinct “why” formal explanations, that are presumably easier to interpret by humans, and give more accurate “why not” explanations. In addition, we show how to use existing rule induction techniques to efficiently extract background information from a dataset.

[arXiv] [PDF] -

Learning Optimal Decision Sets and Lists with SAT Journal of Artificial Intelligence Research, 72, 1251-1279, 2021

Decision sets and decision lists are two of the most easily explainable machine learning models. Given the renewed emphasis on explainable machine learning decisions, both of these machine learning models are becoming increasingly attractive, as they combine small size and clear explainability. In this paper, we define size as the total number of literals in the SAT encoding of these rule-based models as opposed to earlier work that concentrates on the number of rules. In this paper, we develop approaches to computing minimum-size “perfect” decision sets and decision lists, which are perfectly accurate on the training data, and minimal in size, making use of modern SAT solving technology. We also provide a new method for determining optimal sparse alternatives, which trade off size and accuracy. The experiments in this paper demonstrate that the optimal decision sets computed by the SAT-based approach are comparable with the best heuristic methods, but much more succinct, and thus, more explainable. We contrast the size and test accuracy of optimal decisions lists versus optimal decision sets, as well as other state-of-the-art methods for determining optimal decision lists. Finally, we examine the size of average explanations generated by decision sets and decision lists.

[HTML] [PDF] -

Optimal Decision Lists Using SAT CoRR abs/2010.09919, 2020

Decision lists are one of the most easily explainable machine learning models. Given the renewed emphasis on explainable machine learning decisions, this machine learning model is increasingly attractive, combining small size and clear explainability. In this paper, we show for the first time how to construct optimal “perfect” decision lists which are perfectly accurate on the training data, and minimal in size, making use of modern SAT solving technology. We also give a new method for determining optimal sparse decision lists, which trade off size and accuracy. We contrast the size and test accuracy of optimal decisions lists versus optimal decision sets, as well as other state-of-the-art methods for determining optimal decision lists. We also examine the size of average explanations generated by decision sets and decision lists.

[HTML] [PDF] -

Computing Optimal Decision Sets with SAT 26th International Conference on Principles and Practice of Constraint Programming (CP 2020)

As machine learning is increasingly used to help make decisions, there is a demand for these decisions to be explainable. Arguably, the most explainable machine learning models use decision rules. This paper focuses on decision sets, a type of model with unordered rules, which explains each prediction with a single rule. In order to be easy for humans to understand, these rules must be concise. Earlier work on generating optimal decision sets first minimizes the number of rules, and then minimizes the number of literals, but the resulting rules can often be very large. Here we consider a better measure, namely the total size of the decision set in terms of literals. So we are not driven to a small set of rules which require a large number of literals. We provide the first approach to determine minimum-size decision sets that achieve minimum empirical risk and then investigate sparse alternatives where we trade accuracy for size. By finding optimal solutions we show we can build decision set classifiers that are almost as accurate as the best heuristic methods, but far more concise, and hence more explainable.

Lecture Notes in Computer Science, vol. 12333, pp. 952–970, 2020 [arXiv] [HTML] [PDF]

Education

- Feb 2021 - Aug 2024: PhD, Department of Data Science & AI, Faculty of IT, Monash University

- Mar 2019 - Dec 2020: Master of Information Technology, Monash University

Awards

- Our paper “Computing Optimal Decision Sets with SAT” is selected for the Best Paper Award for the CP/ML Track CP 2020!

- Monash Faculty of Information Technology International Postgraduate Research Scholarship

- Monash Faculty of Information Technology Research Scholarship

Skills

- Proficient in Python, Java, R, SQL

- Familiar with C/C++, Spark, MongoDB, MATLAB, MiniZinc, and machine/deep learning models e.g. neural network, transformer, LLM, gradient boosted tree.

- Experience with data analysis, NLP, CV, and diverse libraries such as pandas, numpy, scikit‑learn, TensorFlow, PyTorch, and PySAT.